You Can’t Ping Me If I Don’t Exist

It started with a simple Facebook post.

Someone from our apartment building’s group chat mentioned that their internet was down. I was working from the office that day and casually checked my own self-hosted services. Nothing loaded. So I did what every normal person does in this situation: opened a terminal and started pinging my home server.

Nothing. Not even a timeout.

And so began the "digital forensics expedition".

Hello? Is Anyone Out There?

I couldn’t reach anything at home - not my IP, not my self-hosted domains, not even basic ping replies. So I did some DNS checks. dig myispdomain.com returned... nothing. ping ns1.myispdomain.com and ns2.myispdomain.com? Also dead.

I even ran a traceroute to their DNS IPs - and watched it die right at the edge of their network. Something big was broken.
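For reference, the checks above can be sketched as a small shell helper. This is an illustration, not the exact commands typed that day: the function names (resolves, answers_ping, report, diagnose) are made up, and the ns1/ns2 naming is simply assumed from the hostnames mentioned above.

```shell
#!/bin/sh
# Sketch only: function names are hypothetical, not from the original session.

# Succeeds only if dig prints at least one answer line.
# dig writes its own error notices as lines starting with ';', so skip those.
resolves() {
    dig +short +time=2 +tries=1 "$1" 2>/dev/null | grep -qv '^;'
}

# Succeeds only if the host answers at least one of three echo requests.
answers_ping() {
    ping -c 3 "$1" >/dev/null 2>&1
}

# Run a check and print OK or FAIL next to a label.
report() {
    label="$1"
    shift
    if "$@"; then
        echo "OK   $label"
    else
        echo "FAIL $label"
    fi
}

# Check the domain itself, then both of its (assumed) name servers.
diagnose() {
    report "DNS lookup for $1" resolves "$1"
    for ns in "ns1.$1" "ns2.$1"; do
        report "ping $ns" answers_ping "$ns"
    done
}
```

Calling diagnose myispdomain.com prints one OK or FAIL line per check. If everything fails, a traceroute to one of the name servers is the natural next step to see where the path stops.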

Facebook groups and tech chats started lighting up with people confirming: the ISP was down. Entire chunks of Lithuania’s internet went dark.

Something, Somewhere, Somehow

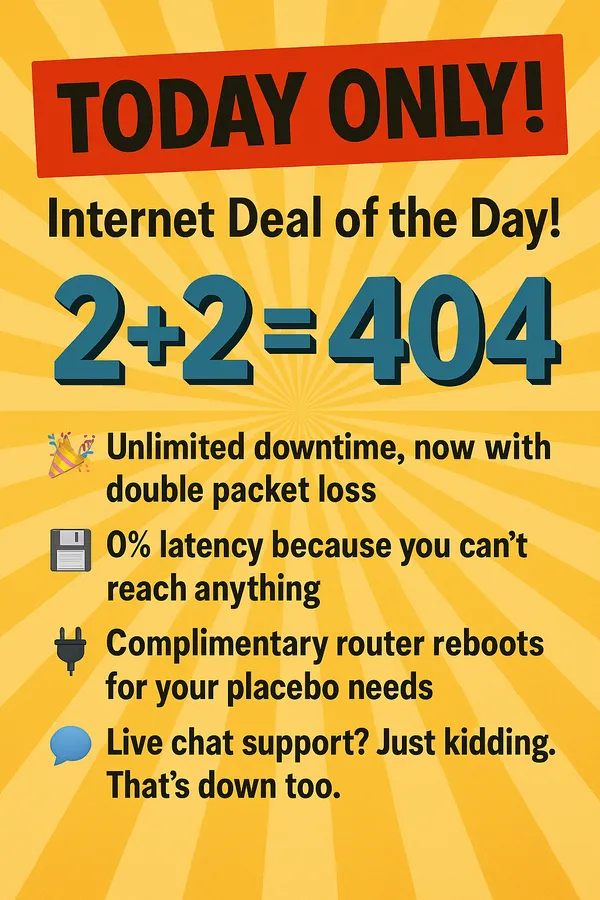

While the provider never gave a full technical explanation (at least at the moment of writing this), I completed my "autopsy without a body" and here’s where 2+2 led me:

- Both authoritative DNS servers became unreachable.

- Their WHOIS-listed contact email didn’t even exist.

- Routing to customer IPs failed - traceroutes dropped mid-way.

- Their phone support line was unreachable.

- Their website was also down for hours.

- Basically, every part of their "contact us" plan relied on a working network. That's... brave, I guess.

It was a full-blown infrastructure collapse - so deep that they couldn't even inform their own clients about it. Their client management system, phones, and communication channels were all tied to the same internal network that went down.

This Is My Hobby Now, I Guess

As the hours dragged on, I:

- Wrote a bash script to continuously ping my home domain and log the results.

- Notified the registrar before realizing the ISP hosts their own DNS.

- Ran WHOIS on IP blocks and found real human contacts.

- Even emailed them about their broken WHOIS email and the ongoing outage (the irony).

I had the bash script running non-stop from my phone, logging every heartbeat (more of a flatline, though) all day and through the night.
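The script itself isn't shown here, so below is a minimal sketch of what such a logger could look like. LOG_FILE, INTERVAL, the function names, and the "Ping failed" wording are all assumptions for illustration. Only the "Ping successful!" message comes from the real thing.

```shell
#!/bin/sh
# Sketch only: a reconstruction, not the original script.
# LOG_FILE and INTERVAL are assumed names with assumed defaults.
LOG_FILE="${LOG_FILE:-ping.log}"
INTERVAL="${INTERVAL:-10}"

# Send a single echo request; succeed only if a reply comes back.
# -c 1 sends one packet; -W 2 is the reply timeout (seconds on Linux iputils).
probe() {
    ping -c 1 -W 2 "$1" >/dev/null 2>&1
}

# Print one timestamped result line and append it to the log file.
log_result() {
    if probe "$1"; then
        status="Ping successful!"
    else
        status="Ping failed"
    fi
    printf '%s %s %s\n' "$(date '+%Y-%m-%d %H:%M:%S')" "$1" "$status" | tee -a "$LOG_FILE"
}

# Probe a host every INTERVAL seconds.
# An optional second argument limits the number of probes;
# 0 (the default) means run until interrupted.
monitor() {
    remaining="${2:-0}"
    while :; do
        log_result "$1"
        if [ "$remaining" -gt 0 ]; then
            remaining=$((remaining - 1))
            [ "$remaining" -eq 0 ] && break
        fi
        sleep "$INTERVAL"
    done
}
```

Something like monitor home.example.com (a placeholder hostname) would then keep appending one line per probe to ping.log until stopped with Ctrl+C. The timestamps are what make it possible to say afterwards exactly when the first reply came back.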

The next day, the WHOIS entry was updated with a proper contact. They replied to my technical email, thanked me for my observations, and explained they were dealing with a massive outage. Although my email was clearly late to the party yesterday 😅

Facebook Became the Helpdesk Now

The ISP's Facebook became the emergency status page. Some highlights:

“[...] This includes internet, phone, and surveillance systems. We’ve lost access for over five hours and no one is answering your phones.”

— A frustrated business client

“Phones aren’t turned off — they just don’t work, because they also rely on internet.”

— ISP representative on Facebook

“First thought: maybe they went bankrupt 😅”

— A concerned user

“This is the first time in the company’s history we’re experiencing a failure of this scale.”

— ISP support representative

They didn’t just lose internet service - they lost their own ability to communicate that service was lost. It’s like your fire alarm and fire extinguisher being on fire, ugh...

This wasn’t just a network hiccup - this was a total and real infrastructure collapse.

It’s Alive! Wait, Nope.

Late in the evening, I got a single:

Ping successful!

...and then it was gone again.

Looks like they were rebooting their systems, testing routes, and slowly bringing things back online. The poor support team must have answered hundreds of emails with the same copy-paste.

Everything Worked, Except the Part That Didn’t

And that part just happened to be the Internet. Yes, I self-host some stuff, and nothing mission-critical broke for me, but it was a reminder of how much self-hosting can depend on a single ISP:

- ❌ My services were LAN-only

- ❌ VPN access was gone

- ❌ My blog was down

- ❌ A small site I host for someone else was also offline

That said, my local "intranet" still worked - I could watch movies, access documents, and use my services locally across devices. This is one of the reasons I self-host :) The world can fall apart, but inside my tiny bubble - everything still runs.

The Return (Mostly)

At exactly 08:21:48, after nearly 20 hours of complete silence, the first successful ping came through!

For a few minutes, everything was up. Then it started to act drunk - momentary drops, failed pings, websites timing out. Eventually, around 08:30, things stabilized for good.

Watching "Ping successful!" repeat in my terminal window felt like a small personal victory. I was already half-ready to drive to the office for work, and now I could enjoy my day of WFH. I was rooting for my ISP the whole time 😄

Final Thoughts

To be fair, this provider had always been reliable before this. This was their first major outage in years. But it was also a reminder of just how fragile internet infrastructure really is.

Even DNS, phones, websites - they all rely on the same critical infrastructure. And when that goes down? Everything goes.

I didn’t ask for a refund or a discount. Honestly, I felt kinda bad for them - they’ll need every euro they can get to recover from this one. Imagine the B2B hell they're going through right now - how many SLAs they must have broken. Hope they won't go bankrupt from SLA compensation claims...

Until the next outage.